AI can't replace a programmer.

Coding with ChatGPT - dev log #1

When ChatGPT broke free into the wild, and after watching a couple of explainer videos plus a few more about the future of GPT, I'm not gonna lie: I imagined things like the browser no longer being needed, because anything you might want, whether it's information, booking a hotel or ordering online, could be (will be) doable from an AI's interface.

Then I sat down and started prompting ChatGPT.

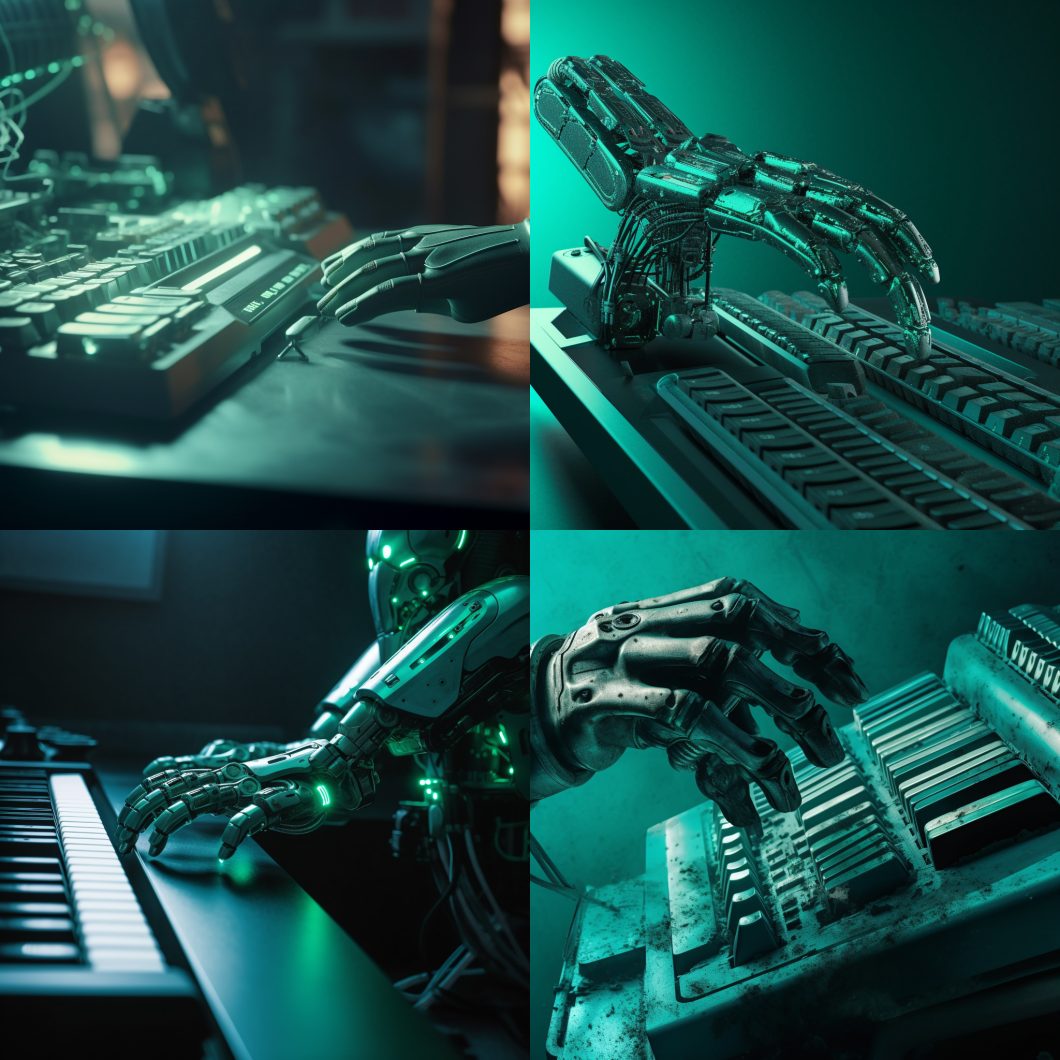

P.S. All images were created by Midjourney, using my prompts.

Language models have really come a long way!

As a chatbot, ChatGPT is amazing. I can see why it gained 100M monthly users in 2 months. All its predecessor chatbots were exactly that: bots. They reacted to specific keywords, and underneath was just a bunch of 'if' statements. Yes, yes ... neural networks, which are the foundation of AI, are also just a bunch of 'if' statements, only on steroids, strapped to a rocket, and a couple million times larger and smarter. So after a month of playing around with various AIs, these are my first conclusions...

ChatGPT 3.5 is bad at code!

Well, at least for me. My stack is on the frontend: Vue, and more specifically Nuxt. Both Vue and Nuxt made the major jump to version 3 in the last year, so finding documentation is hard, because some information is still in the v2 docs and some is in the v3 docs. So I thought GPT might be better at it.

Spoiler alert, it's not!

Sure, simple functions and concepts are generated just fine, but when you instruct GPT 3.5 to use a specific context, plugins or style, it'll stick to it for maybe 1 or 2 answers and then ignore the base context. I constantly had to remind GPT that I want my answers in the Composition API and that I use day.js for dates.

My whole experience felt like holding a junior dev's hand. Sure, they get some things right, but with slightly more advanced instructions they start making things up, and you have to constantly correct them. My patience ran out, I got fed up and went with the good ol' "I'll do it myself".

"What about Copilot?"

I assumed GitHub Copilot would be better, seeing as it's embedded in the IDE you use. I asked some colleagues how it works and whether it understands the context of the whole project. The answer was no. Some even said that it's just GPT underneath. So sure, it can generate a couple of versions of a function, but without the entire context of a project, including the 3rd party plugins and modules you use, it'll never generate an answer good enough to just copy-paste directly into the codebase.

Enter GPT-4

I got access to GPT-4 and once again started by listing my stack, preferred style and some key modules/plugins I use. I was pleasantly surprised. This version of the model fully understood the context and kept it ... in fact, it still holds it to this day.

ChatGPT 4 helped me sort out some middleware and helped optimise a couple of components (albeit I had to copy-paste the entire component into the chat window). Finally I felt I had an assistant better than Google or Stack Overflow, one that had the answers I needed or, worst case, the direction I needed to head in. There is still a long way to go, but as soon as an AI understands the entire scope of a project, that's when it will fully shine.

There is one thing that caught my attention though, and was a bit worrisome.

"When AI started altering my vision."

Since I was very happy with most of the suggestions ChatGPT gave when presented with my code, I implemented about 50% of the AI's optimisations. Some things I skipped because they were too abstract and lost readability, or just got mixed up ... small stuff.

But while optimising another one of my components, it suddenly decided to remove a button that triggered validation. This was a simple form with a button at the end that verified and submitted the data. The AI opted for "on the fly" validation and decided to "watch" for any changes in the fields. That works, but it's not exactly performant, and UX-wise you don't want to harass users about incorrectly filled fields on every keystroke.
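To make the trade-off concrete, here is a minimal sketch in plain TypeScript, with no Vue involved. Everything in it (the `SignupForm` shape, the field rules, the function names) is made up for illustration and is not my actual component or what ChatGPT generated. It only simulates typing an email one keystroke at a time and counts how often validation runs and how often the user would be shown an error.

```typescript
// Hypothetical form shape, invented for this example.
type SignupForm = { name: string; email: string };
type Errors = Partial<Record<keyof SignupForm, string>>;
type Outcome = { runs: number; errorsShown: number };

// Pure validation: returns one message per invalid field.
function validate(form: SignupForm): Errors {
  const errors: Errors = {};
  if (form.name.trim() === "") errors.name = "Name is required";
  if (!/^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(form.email)) {
    errors.email = "Email looks invalid";
  }
  return errors;
}

function hasErrors(errors: Errors): boolean {
  return Object.keys(errors).length > 0;
}

// Approach A: the button. Nothing is validated while typing,
// validation runs exactly once when the user submits.
function validateOnSubmit(keystrokes: string[]): Outcome {
  const form: SignupForm = { name: "Ann", email: "" };
  for (const key of keystrokes) form.email += key;
  const errors = validate(form);
  return { runs: 1, errorsShown: hasErrors(errors) ? 1 : 0 };
}

// Approach B: the "watcher". Validation runs after every change,
// so a half-typed email is reported as an error again and again.
function validateOnEveryChange(keystrokes: string[]): Outcome {
  const form: SignupForm = { name: "Ann", email: "" };
  const outcome: Outcome = { runs: 0, errorsShown: 0 };
  for (const key of keystrokes) {
    form.email += key;
    outcome.runs++;
    if (hasErrors(validate(form))) outcome.errorsShown++;
  }
  return outcome;
}

const keystrokes = "a@b.co".split("");
console.log("on submit:", validateOnSubmit(keystrokes));
console.log("on every change:", validateOnEveryChange(keystrokes));
```

For the six keystrokes of `a@b.co`, the submit version validates once and shows no error, while the watcher version validates six times and flags the email as invalid on the first four keystrokes, before the user has even finished typing.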

I confronted the AI, stating that I need that button, and asked why it was removed. It apologised, and then made me chuckle, because its response was: "I see no other optimisation in this code".

Even though ChatGPT 4 is miles better than the previous model, you still need the human touch. Should IT companies start cutting their developers and leave one-man armies geared with AI to write code? No. As you can see above, even if the next iterations of the models become better at code, at context and at optimising, you will still need the human touch that pulls the last strings.

My two favorite quotes about AI are: "AI will do 90% of the work, you just need to refine it" and "AI is an amazing tool. It feels like moving from candlelight to electric light bulb".